About Me

I'm a Visiting Researcher at Google working on problems involving geospatial modeling and network-level optimization of urban transportation networks. Prior to this, I received my Ph.D. at the University of California, Berkeley under the mentorship of Prof. Alexandre M. Bayen, where I explored methods for efficiently adapting deep reinforcement learning to the problem of mixed-autonomy traffic. During this period, I took part in the first large-scale field experiment aimed at demonstrating the ability of intelligently designed automated driving systems to reduce traffic congestion.

I am interested in adapting machine learning techniques to new or unexplored problems, and particularly enjoy working in interdisciplinary groups that bring modern machine learning methods to exciting new fields.

Projects

Geospatial reasoning and foundational models

Developing machine learning solutions to different geospatial problems in transportation networks.

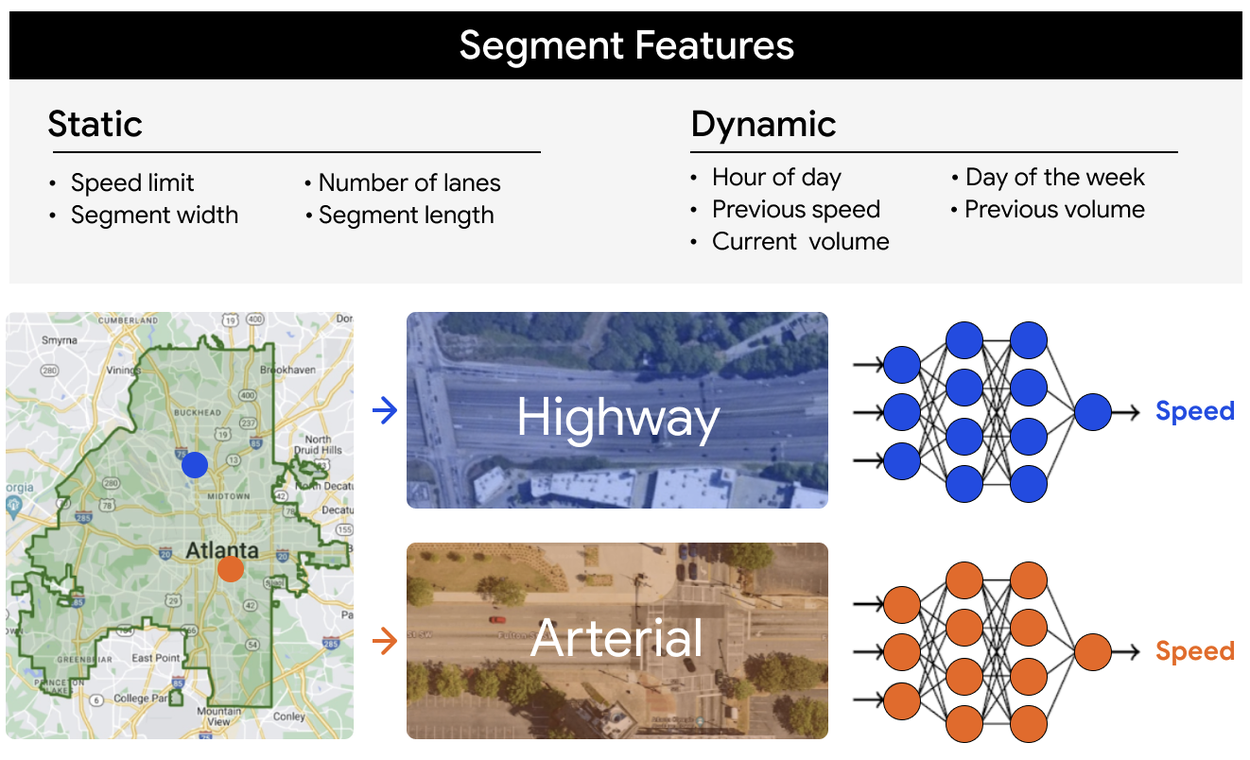

- Scalable Learning of Segment-Level Traffic Congestion Functions. S. Choudhury, A. Kreidieh, I. Tsogsuren, N. Arora, C. Osorio, and A. Bayen. IEEE Conference on Intelligent Transportation Systems, 2024 Blog

CIRCLES

We conduct a live field experiment aimed at reducing traffic jams via connected and automated vehicles.

- Traffic control via connected and automated vehicles: An open-road field experiment with 100 CAVs. J. Lee, H. Wang, K. Jang, A. Hayat, M. Bunting, A. Alanqary, W. Barbour, Z. Fu, X. Gong, G. Gunter, S. Hornstein, A. Kreidieh, ... and A. Bayen. arXiv preprint arXiv:2402.17043, 2024 Website

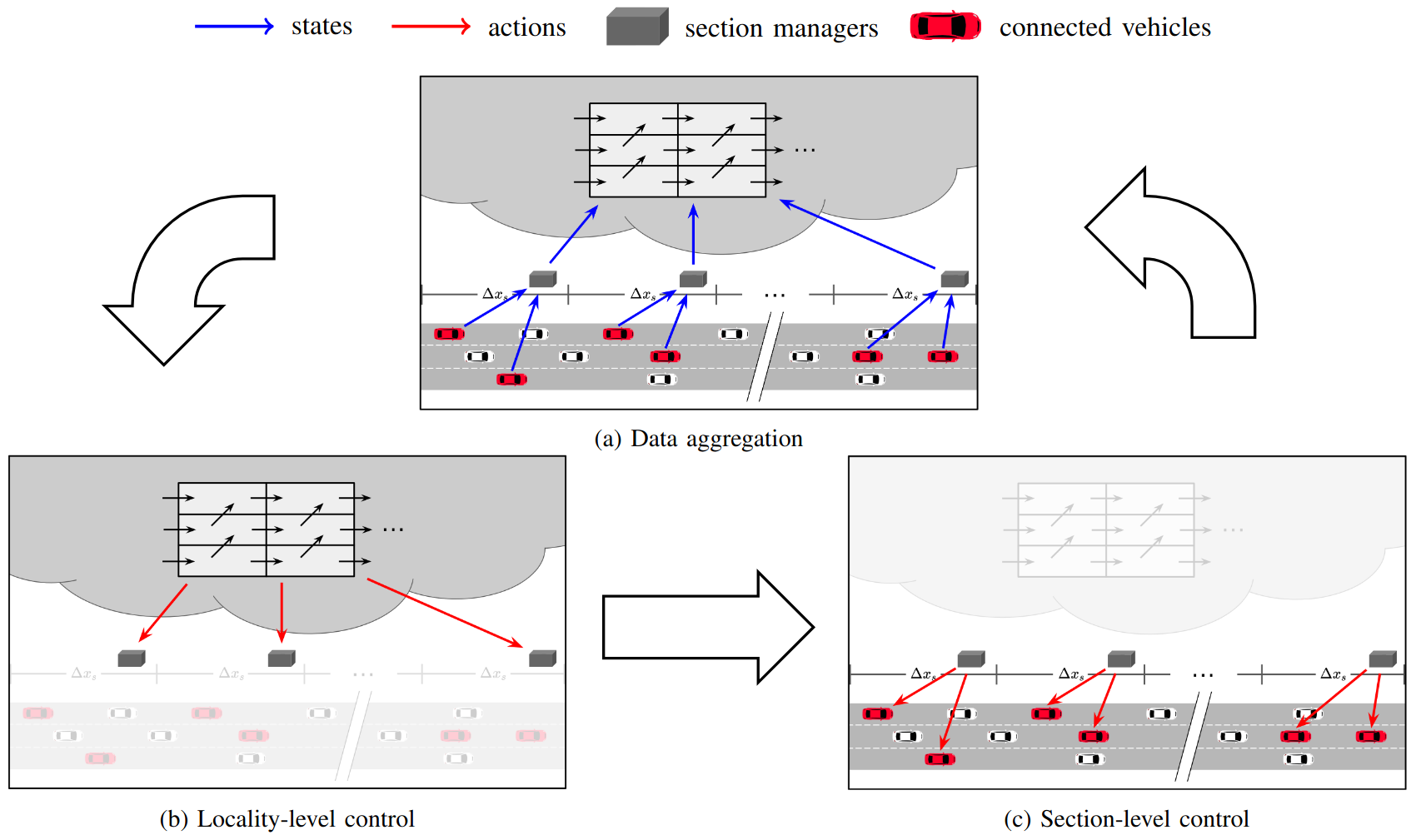

Lateral motion planning for automated vehicles

Formulating hierarchical decision-making processes for lane-assignment strategies, and learning best responses in congested regimes via reinforcement learning.

- Lane assignment of connected vehicles via a hierarchical system. A. Kreidieh, Y. Farid, and K. Oguchi. IEEE Conference on Intelligent Transportation Systems, 2022

- Lateral flow control of connected vehicles through deep reinforcement learning. A. Kreidieh, Y. Farid, and K. Oguchi. IEEE Conference on Intelligent Transportation Systems, 2022

- Non-local Evasive Overtaking of Downstream Incidents in Distributed Behavior Planning of Connected Vehicles. A. Kreidieh, Y. Farid, and K. Oguchi. IEEE Intelligent Vehicles Symposium (IV). 2021

Deep reinforcement learning for mixed autonomy traffic

Developing methods for adapting reinforcement learning to the problem of reducing traffic congestion via automated vehicles.

- Inter-Level Cooperation in Hierarchical Reinforcement Learning. A. Kreidieh, G. Berseth, B. Trabucco, S. Parajuli, S. Levine, and A. Bayen. arXiv preprint arXiv:1912.02368, 2019 Code

- Dissipating stop-and-go waves in closed and open networks via deep reinforcement learning. A. Kreidieh and A. Bayen. IEEE Conference on Intelligent Transportation Systems, Maui, HI, 2018, p. 732 Blog

Flow

A tool for simulating interactions between human-driven and autonomous agents in mixed-autonomy traffic flow settings.

- Flow: A modular learning framework for mixed autonomy traffic. C. Wu, A. Kreidieh, K. Parvate, E. Vinitsky, and A. Bayen. IEEE Transactions on Robotics, 2021 Blog Code Website

- Benchmarks for reinforcement learning in mixed-autonomy traffic. E. Vinitsky, A. Kreidieh, L. Le Flem, N. Kheterpal, K. Jang, C. Wu, F. Wu, R. Liaw, E. Liang, and A. Bayen. Conference on Robot Learning (pp. 399-409). PMLR, October 2018

Flow

Flow is a tool for simulating interactions between human-driven and autonomous agents in mixed-autonomy traffic flow settings. It combines popular traffic microsimulators (SUMO, Aimsun) and reinforcement learning libraries (RLlib, stable-baselines, etc.) within an MDP formalism of traffic in which features such as network geometry and agent behaviors may be flexibly assigned. You can learn more about this work and explore the framework yourself via the links and papers below.
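To make the MDP view of traffic described above more concrete, here is a minimal, self-contained sketch of a ring-road environment with one automated vehicle among human-driven ones. It does not use Flow's API or any traffic simulator: the class name `RingRoadEnv`, its parameters, the proportional car-following rule, and the reward are all illustrative assumptions chosen only to show the reset/step structure of such an environment.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


def _clip(value: float, low: float, high: float) -> float:
    """Bound a value to the interval [low, high]."""
    return max(low, min(high, value))


@dataclass
class RingRoadEnv:
    """Toy ring-road MDP (hypothetical, not part of Flow).

    Vehicle 0 is the automated vehicle controlled by the agent; all other
    vehicles follow a simple hand-written car-following rule.
    """
    length: float = 230.0       # ring circumference in meters (assumed)
    num_vehicles: int = 10      # total vehicles, including the automated one
    dt: float = 0.1             # simulation step in seconds
    target_speed: float = 5.0   # speed the reward encourages, in m/s
    max_accel: float = 1.5      # acceleration bound in m/s^2
    positions: List[float] = field(default_factory=list)
    speeds: List[float] = field(default_factory=list)

    def reset(self) -> List[float]:
        """Place vehicles at equal spacing, at rest, and return the observation."""
        spacing = self.length / self.num_vehicles
        self.positions = [i * spacing for i in range(self.num_vehicles)]
        self.speeds = [0.0] * self.num_vehicles
        return self._observe()

    def _headway(self, i: int) -> float:
        """Distance from vehicle i to the vehicle ahead of it on the ring."""
        leader = (i + 1) % self.num_vehicles
        return (self.positions[leader] - self.positions[i]) % self.length

    def _human_accel(self, i: int) -> float:
        """Stand-in human driver: track a speed that grows with the headway."""
        desired_speed = min(2.0 * self.target_speed, 0.5 * self._headway(i))
        return _clip(desired_speed - self.speeds[i], -self.max_accel, self.max_accel)

    def _observe(self) -> List[float]:
        """Observation of the automated vehicle: its own speed and headway."""
        return [self.speeds[0], self._headway(0)]

    def step(self, action: float) -> Tuple[List[float], float, bool]:
        """Apply the agent's acceleration, advance all vehicles by one step."""
        # Compute every acceleration from the current state before updating.
        accels = [_clip(action, -self.max_accel, self.max_accel)]
        accels += [self._human_accel(i) for i in range(1, self.num_vehicles)]
        for i, accel in enumerate(accels):
            self.speeds[i] = max(0.0, self.speeds[i] + accel * self.dt)
            self.positions[i] = (self.positions[i] + self.speeds[i] * self.dt) % self.length
        # Reward: negative gap between the mean speed and the target speed.
        mean_speed = sum(self.speeds) / self.num_vehicles
        reward = -abs(mean_speed - self.target_speed)
        return self._observe(), reward, False


if __name__ == "__main__":
    env = RingRoadEnv()
    obs = env.reset()
    for _ in range(5):
        obs, reward, done = env.step(1.0)  # constant acceleration as a placeholder policy
    print(obs, reward)
```

In a real setup, the hand-written dynamics would be replaced by a microsimulator and the placeholder policy by one trained with a reinforcement learning library; the sketch only shows how network geometry (`length`), agent behaviors (`_human_accel`), and the reward fit into a single environment definition.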

Webpage: https://flow-project.github.io/

Code: https://github.com/flow-project/flow

Selected publications:

- Flow: A modular learning framework for mixed autonomy traffic. C. Wu, A. Kreidieh, K. Parvate, E. Vinitsky, and A. Bayen. IEEE Transactions on Robotics, 2021

- Benchmarks for reinforcement learning in mixed-autonomy traffic. E. Vinitsky, A. Kreidieh, L. Le Flem, N. Kheterpal, K. Jang, C. Wu, F. Wu, R. Liaw, E. Liang, and A. Bayen. Conference on Robot Learning (pp. 399-409). PMLR, October 2018

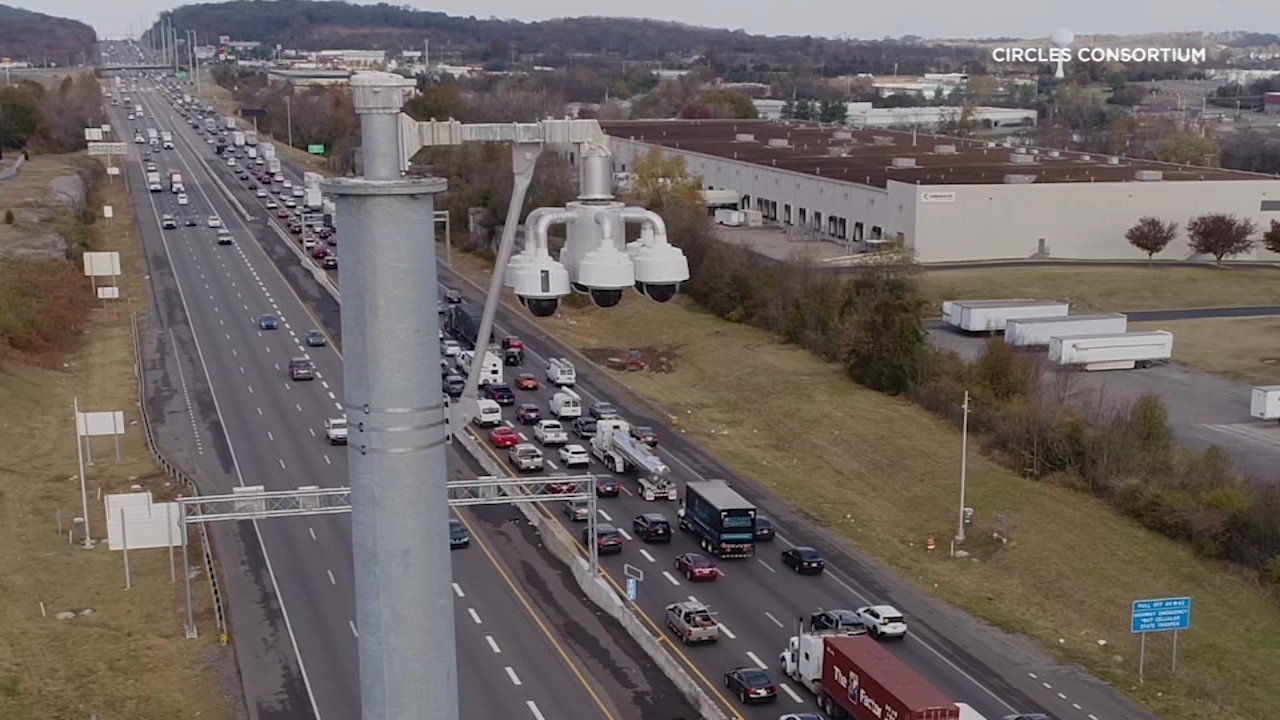

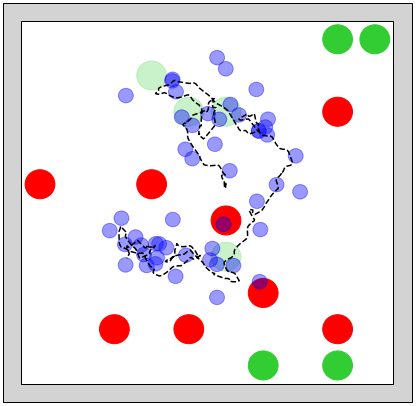

CIRCLES

CIRCLES is a multidisciplinary initiative aimed at demonstrating the efficacy of small penetrations of automated vehicles in reducing global energy emissions in congested highway networks. In November 2022, CIRCLES conducted the largest open-track traffic experiment to date, in which a fleet of 100 vehicles was deployed on Interstate 24 (I-24) in Nashville, Tennessee in an attempt to regulate the human-driven factors that contribute to traffic congestion. You can read more about these efforts via the links below.

Webpage: https://circles-consortium.github.io/

Selected publications:

- Learning energy-efficient driving behaviors by imitating experts. A. Kreidieh, Z. Fu and A. Bayen, IEEE Conference on Intelligent Transportation Systems, 2022

- Integrated Framework of Vehicle Dynamics, Instabilities, Energy Models, and Sparse Flow Smoothing Controllers. J.W. Lee, G. Gunter, R. Ramadan, S. Almatrudi, P. Arnold, J. Aquino, ... and B. Seibold. Proceedings of the Workshop on Data-Driven and Intelligent Cyber-Physical Systems (pp. 41-47), May 2021

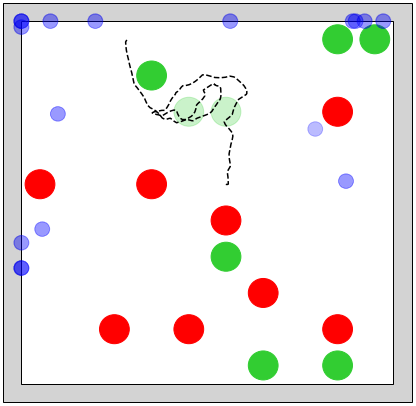

Inter-Level Cooperation in Hierarchical Reinforcement Learning

[Figure legend: Agent, Trajectory, Goal, Apple (+1), Bomb (-1)]

Hierarchical RL techniques present promising methods for enabling structured exploration in complex long-term planning problems. Similar to convolutional models in computer vision and recurrent models in natural language processing, hierarchical models exploit the natural temporal structure of sequential decision-making problems, thereby accelerating learning in settings where feedback is sparse or delayed. Such systems, however, struggle when all levels within a hierarchy are trained concurrently, which hinders the flexibility and performance of existing algorithms. In this study, we draw connections between these and similar challenges in multi-agent RL, and hypothesize that increased cooperation between policy levels in a hierarchy may help ameliorate hierarchical credit assignment and exploration. We demonstrate that inducing cooperation accelerates learning and produces policies that transfer better to new tasks, highlighting the benefits of our method in learning task-agnostic lower-level skills.
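As a rough illustration of the two-level structure discussed above, the following sketch runs a hand-coded hierarchy on a one-dimensional chain: a higher-level policy proposes a subgoal every few steps, and a lower-level policy moves toward it. This is not the algorithm from the paper. The function names, the fixed policies, and the `coop_weight` term (which simply mixes a share of the sparse environment reward into the lower level's subgoal-reaching reward) are assumptions made only to show where a cooperation signal between levels could enter.

```python
from typing import List, Tuple


def manager_policy(state: float, target: float, max_offset: float = 3.0) -> float:
    """Higher level: propose a subgoal a bounded distance toward the target."""
    offset = max(-max_offset, min(max_offset, target - state))
    return state + offset


def worker_policy(state: float, subgoal: float) -> float:
    """Lower level: take a unit step toward the current subgoal."""
    if subgoal > state:
        return 1.0
    if subgoal < state:
        return -1.0
    return 0.0


def rollout(
    target: float = 10.0,
    horizon: int = 20,
    manager_period: int = 5,
    coop_weight: float = 0.5,
) -> Tuple[float, float, List[float]]:
    """Run one episode and return (final state, environment return, worker rewards)."""
    state = 0.0
    subgoal = state
    env_return = 0.0
    worker_rewards: List[float] = []
    for t in range(horizon):
        # The higher level acts on a slower timescale than the lower level.
        if t % manager_period == 0:
            subgoal = manager_policy(state, target)
        state += worker_policy(state, subgoal)
        # Sparse environment reward: only paid while sitting on the target.
        env_reward = 1.0 if state == target else 0.0
        # Intrinsic reward: how close the lower level is to its subgoal.
        intrinsic = -abs(subgoal - state)
        # Hypothetical cooperation term: share part of the higher-level
        # objective with the lower level (coop_weight = 0 removes it).
        worker_rewards.append(intrinsic + coop_weight * env_reward)
        env_return += env_reward
    return state, env_return, worker_rewards


if __name__ == "__main__":
    final_state, env_return, rewards = rollout()
    print(final_state, env_return, rewards[:3])
```

With `coop_weight = 0.0` the lower level is rewarded only for reaching subgoals, regardless of whether those subgoals help the task; a positive weight ties its reward to the task outcome as well, which is the kind of inter-level coupling the paragraph above refers to.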

Webpage: https://sites.google.com/berkeley.edu/cooperative-hrl

Code: https://github.com/AboudyKreidieh/h-baselines

Selected publications:

- Inter-Level Cooperation in Hierarchical Reinforcement Learning. A. Kreidieh, G. Berseth, B. Trabucco, S. Parajuli, S. Levine, and A. Bayen. Journal of Machine Learning Research (in review), 2021

Journal Publications

Refereed journal publications (published, in press, accepted)

- Kernel-based planning and imitation learning control for flow smoothing in mixed autonomy traffic. Z. Fu, A. Alanqary, A. Kreidieh, and A. Bayen. Transportation Research Part C: Emerging Technologies, 2024

- Unified Automatic Control of Vehicular Systems With Reinforcement Learning. Z. Yan, A. Kreidieh, E. Vinitsky, A. Bayen, and C. Wu. IEEE Transactions on Automation Science and Engineering, 2022

- Flow: A modular learning framework for mixed autonomy traffic. C. Wu, A. Kreidieh, K. Parvate, E. Vinitsky, and A. Bayen. IEEE Transactions on Robotics, 2021

- Multi-receptive field graph convolutional neural networks for pedestrian detection. C. Shen, X. Zhao, X. Fan, X. Lian, F. Zhang, A. Kreidieh, and Z. Liu. IET Intelligent Transport Systems, 13(9), 1319-1328, 2019

Refereed journal publications (in review)

- Inter-Level Cooperation in Hierarchical Reinforcement Learning. A. Kreidieh, G. Berseth, B. Trabucco, S. Parajuli, S. Levine, and A. Bayen. Journal of Machine Learning Research (in review), 2021

Conference Publications

- Towards a Trajectory-powered Foundation Model of Mobility. S. Choudhury, A. Kreidieh, I. Kuznetsov, N. Arora, C. Osorio, and A. Bayen. Proceedings of the 3rd ACM SIGSPATIAL International Workshop on Spatial Big Data and AI for Industrial Applications, 2024

- Scalable Learning of Segment-Level Traffic Congestion Functions. S. Choudhury, A. Kreidieh, I. Tsogsuren, N. Arora, C. Osorio, and A. Bayen. IEEE Conference on Intelligent Transportation Systems, 2024

- Cooperative Driving for Speed Harmonization in Mixed-Traffic Environments. Z. Fu, A. Kreidieh, H. Wang, J. Lee, M. Monache, and A. Bayen. IEEE Intelligent Vehicles Symposium (IV). 2023

- Learning energy-efficient driving behaviors by imitating experts. A. Kreidieh, Z. Fu and A. Bayen. IEEE Conference on Intelligent Transportation Systems, 2022

- Lane assignment of connected vehicles via a hierarchical system. A. Kreidieh, Y. Farid, and K. Oguchi. IEEE Conference on Intelligent Transportation Systems, 2022

- Lateral flow control of connected vehicles through deep reinforcement learning. A. Kreidieh, Y. Farid, and K. Oguchi. IEEE Conference on Intelligent Transportation Systems, 2022

- Non-local Evasive Overtaking of Downstream Incidents in Distributed Behavior Planning of Connected Vehicles. A. Kreidieh, Y. Farid, and K. Oguchi. IEEE Intelligent Vehicles Symposium (IV). 2021

- Learning Generalizable Multi-Lane Mixed-Autonomy Behaviors in Single Lane Representations of Traffic. A. Kreidieh, Y. Zhao, S. Parajuli, and A. Bayen. International Conference on Autonomous Agents and Multiagent Systems, 2022

- Integrated Framework of Vehicle Dynamics, Instabilities, Energy Models, and Sparse Flow Smoothing Controllers. J.W. Lee, G. Gunter, R. Ramadan, S. Almatrudi, P. Arnold, J. Aquino, ... and B. Seibold. Proceedings of the Workshop on Data-Driven and Intelligent Cyber-Physical Systems (pp. 41-47), May 2021

- Continual Learning of Microscopic Traffic Models Using Neural Networks. YZ. Farid, AR. Kreidieh, F. Khaligi, H. Lobel, and A. Bayen, 100th Annual Meeting Transportation Research Board, Washington, DC, 2021

- Guardians of the Deep Fog: Failure-Resilient DNN Inference from Edge to Cloud. A. Yousefpour, S. Devic, B. Nguyen, A. Kreidieh, A. Liao, A. Bayen, and J. Jue, AIChallengeIoT’19: Proceedings of the First International Workshop on Challenges in Artificial Intelligence and Machine Learning for Internet of Things, pp. 25-31, New York, USA, Nov. 2019

- Lagrangian Control through Deep-RL: Applications to Bottleneck Decongestion. E. Vinitsky, K. Parvate, A. Kreidieh, C. Wu, Z. Hu and A. Bayen, IEEE Intelligent Transportation Systems Conference (ITSC), 2018

- Dissipating stop-and-go waves in closed and open networks via deep reinforcement learning. A. Kreidieh and A. Bayen. IEEE Conference on Intelligent Transportation Systems, Maui, HI, 2018, p. 732

- Benchmarks for reinforcement learning in mixed-autonomy traffic. E. Vinitsky, A. Kreidieh, L. Le Flem, N. Kheterpal, K. Jang, C. Wu, F. Wu, R. Liaw, E. Liang, and A. Bayen. Conference on Robot Learning (pp. 399-409). PMLR, October 2018

- Flow: Deep reinforcement learning for control in sumo. N. Kheterpal, K. Parvate, C. Wu, A. Kreidieh, E. Vinitsky, and A. Bayen. EPiC Series in Engineering, 2, 134-151, 2018

- Emergent behaviors in mixed-autonomy traffic. C. Wu, E. Vinitsky, A. Kreidieh and A. Bayen, Conference on Robot Learning, 2017

- Multi-lane Reduction: A Stochastic Single-lane Model for Lane Changing. C. Wu, E. Vinitsky, A. Kreidieh and A. Bayen, IEEE Conference on Intelligent Transportation Systems, Apr. 2017

Workshops / Tutorials

Lagrangian Control for Traffic Flow Smoothing in Mixed Autonomy Settings

Organizers: Alexandre Bayen, George J. Pappas, Benedetto Piccoli, Daniel B. Work, Jonathan Sprinkle, Maria Laura Delle Monache, Benjamin Seibold, Cathy Wu, Abdul Rahman Kreidieh, Eugene Vinitsky, Yashar Zeiynali Farid

Time and Location: IEEE Conference on Decision and Control (CDC), 2019

Webpage: https://cdc2019.ieeecss.org/workshops.php

| Session | Title |
|---|---|
| 1 | Mean Field Models |
| 2 | Deep Reinforcement Learning (RL) |
| 3 | Verification of Deep Neural Networks (DNNs) |

Tutorial on Deep Reinforcement Learning and Transportation

Organizers: Alexandre Bayen, Cathy Wu, Abdul Rahman Kreidieh, Eugene Vinitsky

Time and Location: IEEE International Conference on Intelligent Transportation Systems (ITSC), 2018

Webpage: https://ieee-itsc.org/2018/tutorials.html

| Session | Title | Slides |
|---|---|---|
| 1 | Welcome, opening remarks | Download |
| 2.a | Reinforcement learning | Download |
| 2.b | Q learning | Download |
| 3.a | Policy gradient methods | Download |
| 3.b | Non-policy gradient methods | Download |
| 4 | Deep RL from a transportation lens (model-based RL and inverse RL) | Download |
| 5.a | Tools of the trade (SUMO) | Download |
| 5.b | Tools of the trade (Flow) | Download |
| 5.c | Tools of the trade (Ray RLlib) | Download |
| 6 | Hands-on tutorial on Flow | Download |
| 7 | Advanced topics in deep reinforcement learning (multi-agent RL, representation learning) | Download |

Teaching

EE290O | Deep multi-agent reinforcement learning with applications to autonomous traffic

Organizers: Alexandre Bayen, Eugene Vinitsky, Aboudy Kreidieh, Yashar Zeiynali Farid, Cathy Wu

Time and Location: University of California, Berkeley, Fall 2018

Webpage: https://flow-project.github.io/EE290O/

Lecture / Homework Notebooks: https://github.com/flow-project/EE290O

E7 | Intro to Computer Programming for Scientists and Engineers

Time and Location: University of California, Berkeley, Spring 2017

- Led lab sessions consisting of around 20 students and mentored their development.

- Formulated homework and exam problems in MATLAB.